This famous quote, usually attributed to Tony Hoare, was popularized by Donald Knuth's paper Structured Programming with go to Statements, published in 1974. That was 49 years ago.

In dog years, that's about 200 human years. In software-industry years, it's more like a millennium. Computers have changed drastically since then.

Does this rule still apply?

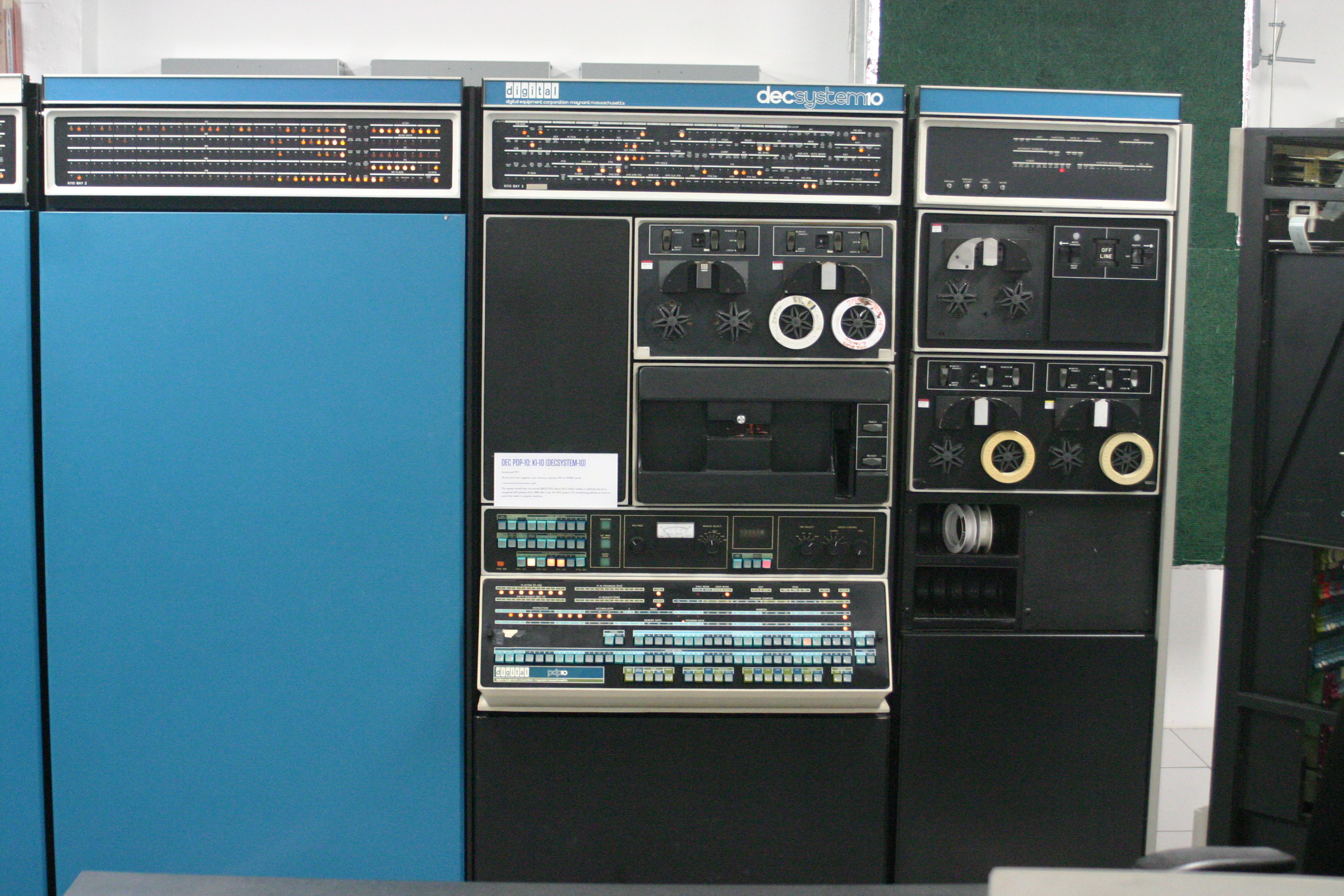

Back then Knuth might have been working with a computer such as the PDP-10 KA10, a machine that offered a maximum of 1152 KB of memory, ran at about 1 MHz, weighed about 870 kg and cost over half a million dollars.

In that context it makes sense that programmers would try to optimize everything by default, since every processor cycle and every byte of memory counted.

Fast-forward half a century: hardware is ridiculously fast and dirt cheap, and the industry is dominated by CRUD applications (what we, frontend and backend devs, do 99% of the time), which are I/O-bound rather than CPU-bound.

Furthermore, the few CPU optimizations we do need, such as React's virtual DOM, are hidden away from everyday developers inside libraries and frameworks built by a few specialists.

CPU optimizations are largely unnecessary now, and thus ignored by default by today's programmers.

And yet this lesson still comes back to haunt us. How can it be?

It's simple: any premature investment of time and effort is generally a bad idea. This applies very much to premature architecture, for example: elaborate designs for software that may get discarded a week later, or overly enthusiastic early design discussions, which can easily spiral out of control and devolve into bike-shedding.

Finally, here are the two paragraphs surrounding the famous quote in Structured Programming with go to Statements:

There is no doubt that the grail of efficiency leads to abuse. Programmers waste enormous amounts of time thinking about, or worrying about, the speed of noncritical parts of their programs, and these attempts at efficiency actually have a strong negative impact when debugging and maintenance are considered. We should forget about small efficiencies, say about 97% of the time: premature optimization is the root of all evil.

Yet we should not pass up our opportunities in that critical 3%. A good programmer will not be lulled into complacency by such reasoning, he will be wise to look carefully at the critical code; but only after that code has been identified. It is often a mistake to make a priori judgments about what parts of a program are really critical, since the universal experience of programmers who have been using measurement tools has been that their intuitive guesses fail.
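Knuth's point about measurement tools still holds, and measuring is cheap today. As a rough illustration only, here is a minimal sketch that uses Python's standard `cProfile` and `pstats` modules to find the "critical 3%" before touching anything. The functions `parse_input`, `fast_looking_step`, `slow_lookup` and `handle_request` are made up for this example and stand in for whatever your program really does.

```python
import cProfile
import io
import pstats


def parse_input(n):
    # Hypothetical cheap step: build a small list of integers.
    return list(range(n))


def fast_looking_step(items):
    # Hypothetical step that is genuinely cheap: one linear pass.
    return sum(items)


def slow_lookup(items):
    # Hypothetical hotspot: a membership test on a list is linear,
    # so this loop is quadratic overall.
    return sum(1 for i in items if i in items)


def handle_request(n=2000):
    # Hypothetical entry point that chains the three steps.
    items = parse_input(n)
    total = fast_looking_step(items)
    hits = slow_lookup(items)
    return total, hits


def profile(func, *args):
    # Run func under the profiler and return (result, text report),
    # with the report sorted by cumulative time.
    profiler = cProfile.Profile()
    result = profiler.runcall(func, *args)
    stream = io.StringIO()
    stats = pstats.Stats(profiler, stream=stream)
    stats.sort_stats("cumulative").print_stats(10)
    return result, stream.getvalue()


if __name__ == "__main__":
    _, report = profile(handle_request)
    print(report)
```

In a report like this, the time should pile up under `slow_lookup` rather than the other steps, and that is the only place where optimizing (for instance, switching to a set) would be worth the effort. The exact timings depend on your machine, so treat the numbers as a guide rather than a benchmark.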